Legacy Applications – How did we get here and what can we do?

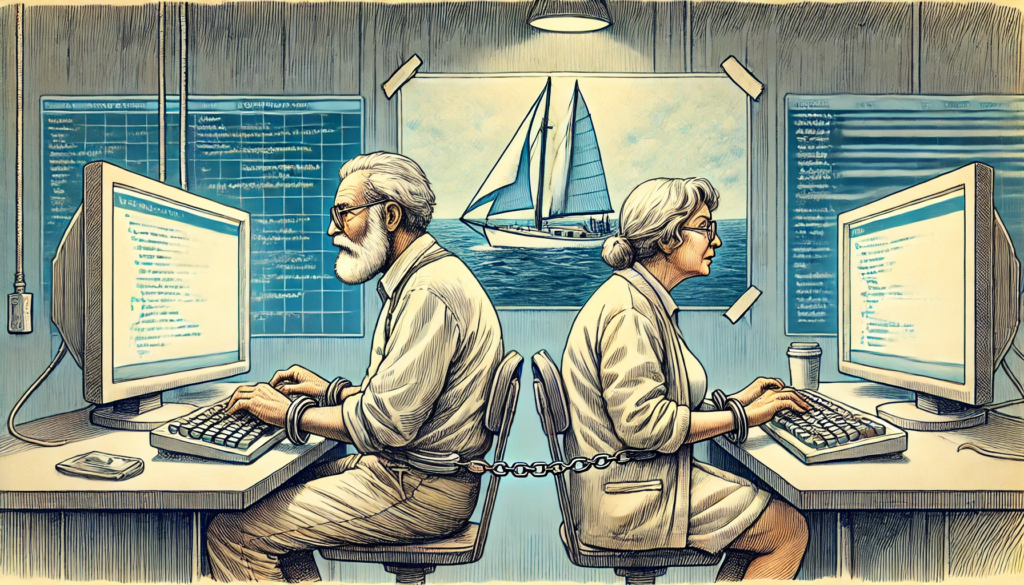

In the early part of 2007, I was building a fast-growing digital transformation agency and found myself talking to a potential client who was teetering on the edge of a crisis. A significant chunk of revenue in one of their business units relied completely on a legacy application with an ancient technology stack. Nearly all of the original team that developed the app had long since departed. Just one person remained, and the inexorable march of time was now taking its toll: retirement beckoned, with the additional lure of a six-month sailing trip of a lifetime.

Resistance was futile, and who could blame them?

The threat to Business Continuity (the ability to keep critical operations running, even during major disruptions) was stark for this organisation, and they had no easy solutions in sight.

Unfortunately, this was not an isolated incident. Whilst the details are always painful and unique, the underlying journey is often very similar:

In the distant past, a talented team used cutting-edge technology to respond brilliantly to a business need, creating an app that encapsulated everything necessary to capitalise on an opportunity or avoid a threat.

Time passed, the team responded to business changes and the benefits continued to flow. A “new normal” emerged.

But… the “business as usual” changes could not generate the same level of excitement for the development team, and gradually they started to slip away to pick up new opportunities and learn new technologies. The loyal few who remained were rewarded with larger-than-average pay increases, and eventually we are down to just one or two people who find themselves in a technological fur-lined rut with no prospect of escape.

Theirs is not to reason why, theirs is but to keep the application running and respond to business changes as they arise!

Weeks turn into months and months into years. But nothing lasts forever, and eventually our business continuity is exposed to a massive risk.

If we’re lucky, the heightened risk will have been picked up by the audit process, in which case there is no alternative but to tackle the problem.

In most other cases, it becomes a problem that’s extremely difficult to tackle because it appears to lack urgency. That is, until a life-changing event galvanises the organisation, forcing it to find a solution, and find it quickly!

In this blog, I’m not going to offer platitudes about “fixing the roof while the sun shines”, nor technical advice about the pros and cons of “moving to the cloud” or “building a React web app”. Instead, I’m going to address the pragmatic options we can create if we find ourselves stuck in the blast zone of this increasingly common demographic time bomb.

So, at a high level, what sensible options might we have? (They aren’t mutually exclusive: slice, dice, mix and match!)

- Educate ourselves

- Create a risk log

- Create options to mitigate and, in full knowledge, accept the risks

Educate ourselves

Ourselves? Who do you mean exactly?

All the stakeholders.

Not sure who they are? Run a small exercise:

If the system became unavailable for an extended time:

- Who would start to shout?

- How quickly?

- Why?

- Really why? Keep asking the “why” question until you’re confident that you understand the root cause behind their potential unhappiness

Once you understand the list of stakeholders and their expectations, you can think about the education process. Which questions do they need to understand the importance of, and have good answers to? And remember, just because we started thinking about this problem because a team member might leave, it’s almost certainly not the only thing threatening your business continuity.

Before we can start to think about potential escape routes, there is some further digging we can do to educate ourselves, and a light pass over these questions at the beginning can bear significant fruit:

- How has the use of the application changed over time?

- How has the business changed over time?

- How has the application landscape changed over time?

- What can we learn from analysing the existing logs about how the application is actually used?

- Is there any further instrumentation that we could add to throw light on what we actually do today?

- How difficult would it be to add each potential piece of instrumentation?

- How much value could we create by adding that piece of instrumentation?
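The log-analysis question above can often be given a cheap first answer. Below is a minimal sketch in Python, assuming (hypothetically) that the application writes one line per user action in the form `<ISO timestamp> <user> <feature>`; real legacy logs will almost certainly look different, so the parsing step would need adapting. The helper name `summarise_usage` and the sample lines are illustrative only. It tallies how often each feature is used, by how many distinct users, and when each was last seen, and it counts lines it could not parse rather than silently ignoring them.

```python
from collections import Counter, defaultdict
from datetime import datetime


def summarise_usage(lines):
    """Summarise feature usage from log lines.

    Assumes a hypothetical format: '<ISO timestamp> <user> <feature>'.
    Returns (calls per feature, distinct users per feature,
    last-seen timestamp per feature, count of malformed lines).
    """
    calls = Counter()
    users = defaultdict(set)
    last_seen = {}
    malformed = 0
    for line in lines:
        parts = line.split()
        if len(parts) != 3:
            malformed += 1  # wrong shape: keep a count so gaps are visible
            continue
        stamp, user, feature = parts
        try:
            when = datetime.fromisoformat(stamp)
        except ValueError:
            malformed += 1  # unparseable timestamp
            continue
        calls[feature] += 1
        users[feature].add(user)
        if feature not in last_seen or when > last_seen[feature]:
            last_seen[feature] = when
    distinct_users = {feature: len(who) for feature, who in users.items()}
    return calls, distinct_users, last_seen, malformed


# Illustrative sample data, not real logs.
sample = [
    "2024-01-05T09:00:00 alice invoice.create",
    "2024-01-05T09:05:00 bob invoice.create",
    "2024-02-11T14:30:00 alice report.monthly",
    "not a valid line",
]
calls, distinct_users, last_seen, bad = summarise_usage(sample)
print(calls.most_common())  # [('invoice.create', 2), ('report.monthly', 1)]
print(distinct_users)       # {'invoice.create': 2, 'report.monthly': 1}
print(bad)                  # 1
```

Features that never appear, or were last seen long ago, are good candidates for the "do we still need this?" conversation; features with many distinct users point at the stakeholders who would shout first.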

Consider the wider landscape and what other changes are afoot in your organisation:

- Are there some new systems coming that could mitigate the need for this system?

- Are there some existing systems that could be repurposed somehow to mitigate the need for this system?

- Are there some new processes that could mitigate the need for this system?

Create a risk log

We can sleep more easily if we have systematically created, and then maintained, a register of the risks we face because of this unhappy situation.

Here’s a starter list of potential questions:

- Why should we care about business continuity for this application?

- What risks should be on our radar that could threaten our business continuity?

- How much could they affect our business?

- How likely are they to occur?

- How quickly could they occur? Consider:
  - Creeping risks
  - Galloping risks
  - Light-speed risks

- What events could increase or reduce their likelihood, severity and speed of onset?
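One lightweight way to start such a register is sketched below in Python. The 1 to 5 scales for likelihood and impact, the likelihood × impact score, and the example entries are all assumptions for illustration (a common risk-matrix convention, not something prescribed above); only the three speed-of-onset categories come from the list. Sorting by score and then by speed of onset puts the risks that would hurt most, with the least warning, at the top.

```python
from dataclasses import dataclass
from enum import IntEnum


class Onset(IntEnum):
    # Speed-of-onset categories from the list; higher value = less warning time.
    CREEPING = 1
    GALLOPING = 2
    LIGHT_SPEED = 3


@dataclass
class Risk:
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    onset: Onset
    triggers: tuple = ()  # events that could change likelihood, severity or speed

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Likelihood x impact: a common convention, assumed here for illustration.
        return self.likelihood * self.impact


def prioritise(register):
    # Highest score first; among equal scores, faster-onset risks come first.
    return sorted(register, key=lambda r: (r.score, r.onset), reverse=True)


# Hypothetical entries for illustration only.
register = [
    Risk("Sole remaining developer retires", 5, 5, Onset.CREEPING,
         triggers=("retirement date announced",)),
    Risk("Vendor withdraws support for the platform", 3, 4, Onset.GALLOPING),
    Risk("Hardware failure on the legacy server", 2, 5, Onset.LIGHT_SPEED),
]

for risk in prioritise(register):
    print(f"{risk.score:>2}  {risk.onset.name:<11}  {risk.description}")
```

Keeping the triggers alongside each risk makes the last question in the list ("what events could change this?") part of the register itself, so a review can check whether any trigger has fired since last time.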

Create options to mitigate and, in full knowledge, accept the risks

In general, we’re not very good at creating a list of five or so genuinely good, legitimate options from which we can pick the best and then act.

I remember reading an analysis a few decades ago which found that a common pattern was:

- Come up with an initial idea

- Knock the rough corners off it

- Become invested in that solution

- Come up with some obviously worse alternatives

- Choose the initial idea and consider it a job well done!

It’s a very easy pattern to fall into, and in my experience it takes conscious effort to avoid!

For starters, you could use this list to seed some more options:

- Recruit or train more people

- Outsource your problem

- Full technology refresh

- Partial technology refresh

- Gradual transformation

- Application refactoring

- Front-end/back-end separation of concerns

- Application landscape refactoring

- Something else

To conclude

To state the obvious, things are ok until they’re not ok. And we’ve all been blindsided at some point by something becoming “not ok” when we didn’t expect it.

Of course it would have been better to have done an application landscape analysis and have been better prepared!

But life isn’t always like that, so if we think in advance about how we’ll handle the crisis when it does jump out at us, our chances of recovering well increase dramatically.

At a high level, remember:

- Educate ourselves

- Create a risk log

- Create options to mitigate and, in full knowledge, accept the risks

If you’re facing an issue like this in your business and you’d like to explore some options, please give me a call.

Peter Brookes-Smith

Curious problem solver, business developer, technologist and customer advocate
