On a misty morning in early October, I left for work in the car. The blowers were on full blast as Greg James’ Radio 1 breakfast show had begun. As I left my estate, the music turned to noise as I began going over yesterday’s lesson with Peter (DT Squad Founder) in my head.

We had been discussing genetic algorithms – a machine learning technique based on Darwin’s theory of evolution. These can be applied to complex problems to generate optimised solutions. It’s awesome and if you’re interested in how they work – Peter blogged about it here a few years ago.

The road followed down a hill into a right hand bend. My mind shifted focus, from algorithms to two pheasants, both perched in the middle of the road side by side. I didn’t have time to slow down or stop, so I let fate run its course. The right-hand pheasant took flight a split second before the other, earning itself another day to live as it glanced off the windshield. The other pheasant wasn’t as lucky. With an audible thud, I watched its body tumble to the side in the rear-view mirror.

I had just witnessed Darwin’s key principle of evolution play out brutally before me – natural selection! I (and the car) introduced a danger into a population (2 pheasants). One survived and one perished. The pheasant that survived might have been genetically superior. Faster reaction time, faster FPS (flaps per second), or it might have just been lucky. Either way, it was able to go and reproduce, with the hopes of passing on these beneficial characteristics to its children.

Okay, get to the point of the blog already! Why the sudden interest in genetic algorithms again?

Well, DT Squad has a SaaS product that’s due to launch in November. The product is a game with one goal: make as much profit as possible. How you accomplish that is down to the team that plays. There are countless paths and decisions that can be taken – all revolving around a simulated market economy.

This is where the genetic algorithm comes in – can it help in identifying the optimal strategy that generates the most amount of profit?

And, can someone like me – who has no previous machine learning experience, learn and develop a genetic algorithm from scratch to solve (in my mind anyway) this big, meaty, good-luck-explaining-this-to-your-dad problem? I’d like to try!

Basics first.

If you read Peter’s blog then you’ll know about the Travelling Salesperson Problem (TSP). Genetic algorithms work extremely well on it hence why it’s used to introduce the topic. The outline of the problem is as follows:

- A salesperson must visit all the cities in their area exactly once.

- They must start and end in their home city.

- Calculate the optimal route that minimises the distance travelled.

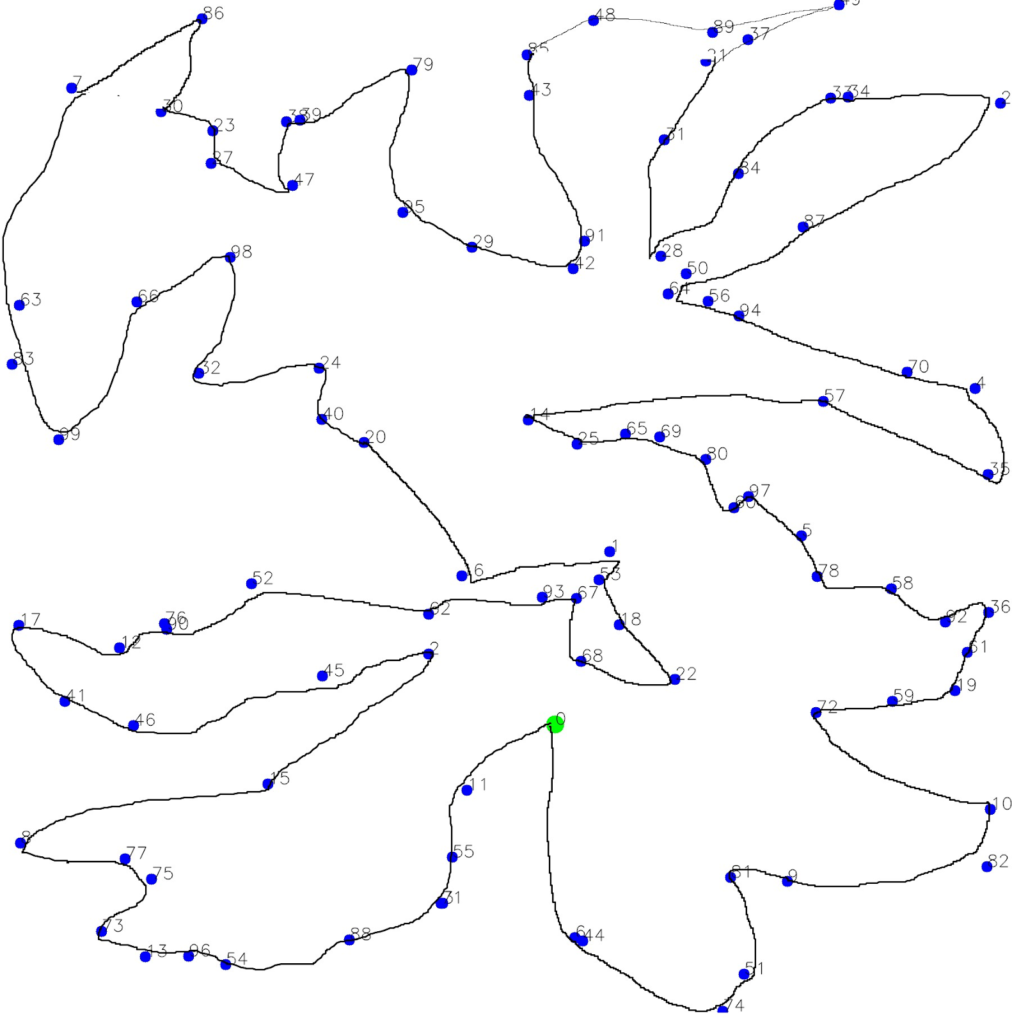

Over the course of a couple weeks, I hashed out a solution to the problem that worked well enough. Below shows the algorithm working for 100 cities.

Figure 1: Genetic algorithm working on 100 city locations in the TSP

Now it was no good stopping there – I had to push the boundaries further. Instead of 100 cities, what if we had 1000, or 10,000? The computational power required to solve this would be massive!

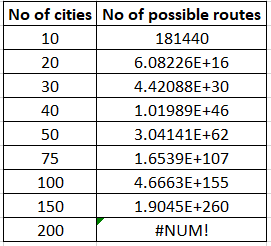

The total number of possible routes is calculated using the equation (n-1)!/2 where n is equal to the number of cities. Each city that’s added increases the total by a factorial – which grows much faster than something that increases exponentially (something you’re probably familiar with). As you can see from below with 200 cities, the number is so large that excel registers it as a number error!

Table 1: Number of distinct routes available for number of cities in the TSP

The algorithm that I’d put together was currently ill-equipped to tackle problems of this scale. Sure it could work for 100 cities, but for 10,000? Not a chance. Well not unless you’d like to wait and watch the Lord of the Rings extended versions back-to-back (That’s 11.4 hours worth for those wondering!) multiple times over.

Now this is where Peter asked if I could look into human injection and its effect on genetic algorithms. I mean, how much of nature hasn’t been affected by humans?

Take my car for example – I don’t think many predators roaming around the Wiltshire countryside come close to the stealth-grey beast that my Nissan Qashqai posed to those 2 pheasants. If I hadn’t come along, both pheasants would be free to procreate, possibly leading to a less optimal change in the pheasant gene pool. With my car, we were able to swiftly remove ‘less-fit’ lives and leave the good ones.

This is exactly what we were going to do with The Travelling Salesperson problem. Instead of the genetic algorithm relying on randomness to generate better lives, we would intervene and inject a more optimal route using our own human intuition. Kind of like dropping a genetically modified superbird into one of the Galapagos Islands.

Firstly, I initialised a blank canvas of city locations, marked on by blue dots (ie a map!) with city numbers on. From the starting city, I then noted down the city numbers in the order of what I thought the most optimal route was. This list of cities was then fed back into the start of the program. To ensure that the best route was never lost between generations (called elitism) I saved a global variable that contained information about the best route found so far.

When I tried this with a low number of cities – it was almost intuitively clear what the best route was. The algorithm struggled to find a better solution. However when I increased the number of cities to 100, the route started to become more complex and it wasn’t as obvious.

Below shows my initial route using MS Paint, and the genetic algorithm working on improving it.

Figure 2: My chosen route for 100 cities in the TSP. Distance: 9.03

Figure 3: Genetic algorithm working on the TSP for 100 cities using my route as an initial input. After 440 generations, distance: 8.4

Comparing Figures 1 and 3, it is clear that in Figure 3 the algorithm tends towards a more optimal route faster. This is as expected since we introduced a more optimal route to begin with. I could have tried this with 200+ cities, but I think you get the point!

Remember when I spoke about the SaaS product DT Squad was working on? The game that tests teamwork, leadership, logic, problem-solving? Well, as a recap, the aim of the game is to generate as much profit as possible by making a series of decisions based on a simulated business environment. Each playthrough of the game has a different environment. This means that the optimal decisions change each game, and with it the maximum amount of profit that can be made. At present, we had no way of working out the best strategy for each runthrough.

Since the genetic algorithm I had built took a high level approach that abstracted the problem away from the method, I was able to quite quickly fiddle around with the parameters, so that instead of calculating the distance of the TSP route (and trying to minimise the distance), I would be calculating the profit made throughout the game (and trying to maximise it).

This is where we hit a roadblock. Whilst a runthrough of the TSP took a fraction of a second, a runthrough of a full cycle of the business simulation game took a whopping 11s. If we were going to model 300 lives and have it cycle over 500 generations, this would take over 500 hours to complete.

However, that’s with synchronous programming. What that means is that each line of code runs one after the other. One cannot start without the other finished. That’s like saying out of the 300 lives, one life cannot be born at the same time as the other. This is where asynchronous (or parallel programming) comes in. A life doesn’t need to wait for another to be born, it doesn’t wait in nature and it won’t wait here!

Python inherently doesn’t allow true parallelism due to the Global Interpreter Lock (I shall not explain this here) but some libraries allow you to bypass it, such as the multiprocessing library. Using it allowed me to harness the full power of my CPU, allowing me to spawn multiple threads (basically the things that run in parallel) to work on the Darwinian process.

Now I didn’t want to stop here. I really wanted to emulate nature. And having just watched (again!) David Attenburgh’s Planet Earth I knew all about the Galapagos islands, and how whole species of animals, whilst so close together to other islands, were so inherently different. However, what if a species of animal evolved so that it could swim the seas or cross the skies all the way over to another island? It would be able to procreate and inject its genes into that population, possibly creating more genetically superior animals able to swim further and fly farther to more islands.

I emulated this behaviour in the following way:

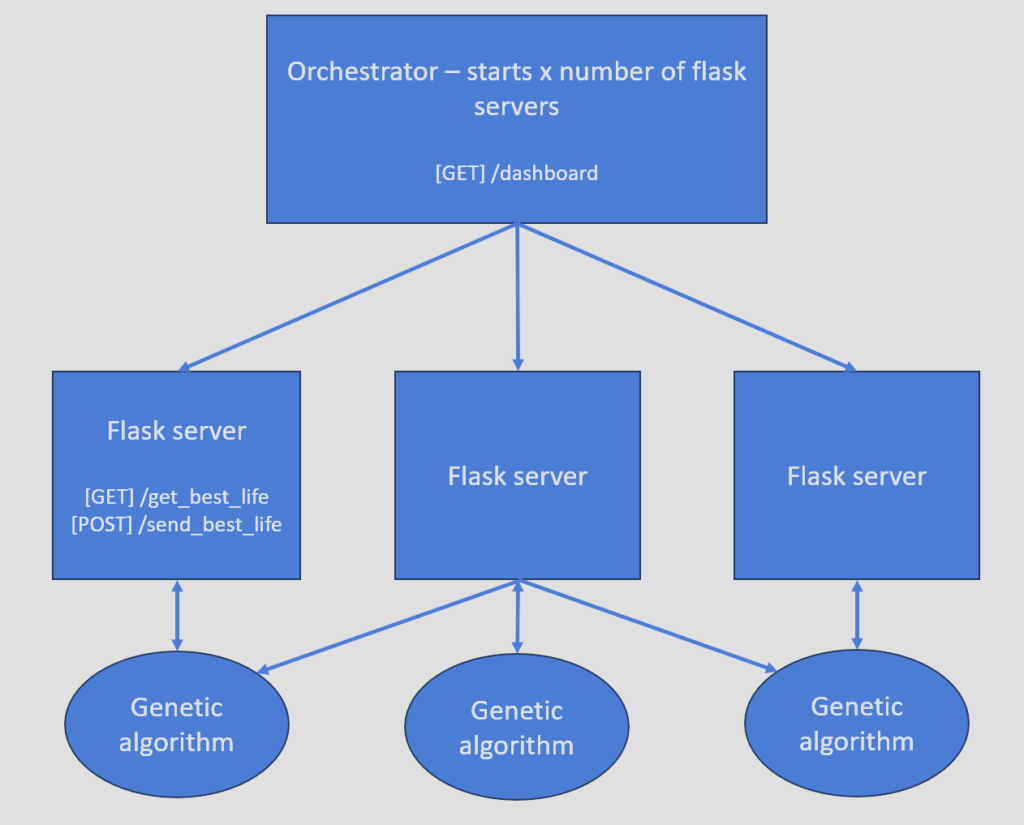

Islands would be represented as a server (I used Flask for this). Each server would generate a population and run through the Darwinian process. The servers would all run separately from each other, replicating the Galapagos Island situation. Each generation, there would be a small (0.0085% chance) that the best life of that generation would make it over to another server, and inject itself into that population. The diagram below hopefully demonstrates this!

The orchestrator is yet another server that allows us to see a high level overview of the whole situation, to see who’s doing best. Kind of like if there were a being sat in the sky, orchestrating the whole process…!

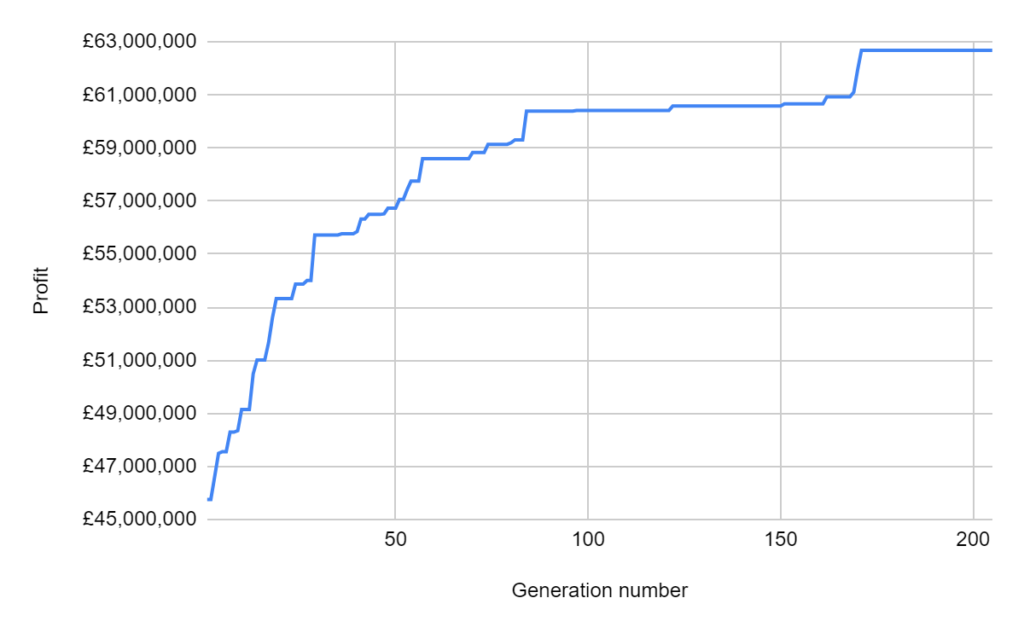

What this would now allow us to do, is set up multiple computers, running multiple servers, all with the common purpose of trying to find the optimised solution to the game! We let the program loose on Peter’s thread ripper CPU, and sure enough, the profits in each generation began to increase! We let the program run over a few days (as it was still only running on just one computer) and we found that the solutions that it was producing was in line with the game scenarios (if you are wondering about the randomness of the games, I implemented seeding so that each game would generate the same randomness).

Success! Below shows the graph of the profits generated each cycle.

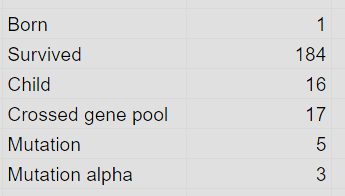

Table 2: Winning population sequence of events

To summarise:

- I learned about a machine learning technique and implemented it from scratch starting from the basics.

- I applied my knowledge to a hard, real world problem and came up with good…maybe great? solutions (we will never know if they are optimal).

- I pushed the boundaries of my knowledge on the Python language and asynchronous programming and learned a lot!

What I would do differently next time:

- Learn and use a low level language like C++ or Rust to run the game, so that each run through could be many times faster.

- Implement a different machine learning strategy. A good candidate would be reinforcement learning (here’s another blog on that). I have a hunch that if this was implemented, then the algorithm could generate optimised run throughs each time it played the game.

What next?

- I really enjoyed writing this piece. I may write some more. Feedback welcome!

- I will continue to push the boundaries of my knowledge in other areas!

If you have managed to get this far… then I salute you!